TorWIC-Mapping

Notes on the Dataset

- The epochs are not in chronological order; instead, they are grouped by the type of change.

- Epochs can be stitched together in different combinations to create varied, changing environments.

- The changes are not annotated in the point cloud, but exist in the form of maps per epoch.

- The semantic labels are predicted from the RGB frames and are therefore not perfect.

- The "Calibration" folder, which should contain the camera intrinsics, is empty. However, in `utils/calibration.py` of the accompanying source code, the intrinsics appear to be hardcoded.

- The depth images are quite noisy for surfaces farther away. Thresholding the depth values (i.e., discarding distant measurements) yields point cloud reconstructions of significantly better quality.

- For the segmentation images of Scenario_1-1, the file names have six digits instead of the four digits used in all other epochs.
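Because the intrinsics are only available from the source code and far-range depth is noisy, a typical reconstruction step back-projects each depth image with a pinhole camera model and drops distant pixels. This is a minimal sketch, not the dataset's own pipeline. It assumes 16-bit depth PNGs in millimeters (a `depth_scale` of 0.001, common for RealSense output but not confirmed for this dataset), pinhole intrinsics `fx`, `fy`, `cx`, `cy` copied from `utils/calibration.py`, and a hypothetical 4 m cutoff `max_depth`. The function name `depth_to_points` is illustrative.

```python
import numpy as np


def depth_to_points(depth_raw, fx, fy, cx, cy, depth_scale=0.001, max_depth=4.0):
    """Back-project a depth image into an (N, 3) array of camera-frame points.

    Assumptions (not from the dataset documentation): raw * depth_scale gives
    meters, a raw value of 0 marks missing depth, and max_depth is a
    hypothetical threshold for discarding noisy far-range measurements.
    """
    depth = depth_raw.astype(np.float64) * depth_scale
    # Keep only valid, nearby measurements; distant surfaces are the noisiest.
    valid = (depth > 0.0) & (depth <= max_depth)
    v, u = np.nonzero(valid)  # row (v) and column (u) indices of kept pixels
    z = depth[v, u]
    # Pinhole model: pixel offset from the principal point, scaled by depth.
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)
```

The returned points are in the camera frame; each frame's pose would still need to be applied to bring them into a common map frame before accumulating a point cloud.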
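Since the segmentation images of Scenario_1-1 use six-digit names while the other epochs use four, matching files by their numeric frame index rather than by exact file name avoids special-casing that epoch. This is a minimal sketch, assuming each file stem ends in the frame number. The helper names `frame_index` and `pair_by_frame` are hypothetical.

```python
import re
from pathlib import Path

# Trailing run of digits in a file stem, e.g. "0042" or "000042".
_TRAILING_DIGITS = re.compile(r"(\d+)$")


def frame_index(filename: str) -> int:
    """Return the frame number at the end of a file stem, ignoring zero padding."""
    stem = Path(filename).stem
    match = _TRAILING_DIGITS.search(stem)
    if match is None:
        raise ValueError(f"no trailing frame number in {filename!r}")
    return int(match.group(1))


def pair_by_frame(rgb_names, seg_names):
    """Pair RGB and segmentation files that share a frame number."""
    seg_by_index = {frame_index(name): name for name in seg_names}
    return [
        (name, seg_by_index[frame_index(name)])
        for name in rgb_names
        if frame_index(name) in seg_by_index
    ]
```

For example, `pair_by_frame(["0001.png", "0002.png"], ["000002.png"])` pairs `"0002.png"` with `"000002.png"` and skips the unmatched first frame.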

Detailed Information

| General Information | |
|---|---|
| Name | TorWIC-Mapping |
| Release Year | 2022 |
| Terms of Use | Unclear (Citation) |
| Access Requirements | None |
| Dataset Size | 69.7 GB (partial download is possible: the data is split into several files, e.g., by epoch or data type) |
| Documentation | In-depth documentation of the acquisition and characteristics of the dataset, e.g., via an explicit dataset paper or a comprehensive multi-page metadata document |
| Code | Yes |
| Applications | Long-term localization and mapping; built environment change detection and classification |
| Detailed Applications | Long-term mapping; object-level change detection |

| Acquisition | |

| Number of Scenes | 1 |

| Number of Epochs per Scene (minimum) | 18 |

| Number of Epochs per Scene (median) | 18 |

| Number of Epochs per Scene (maximum) | 18 |

| General Scene Type | Indoor |

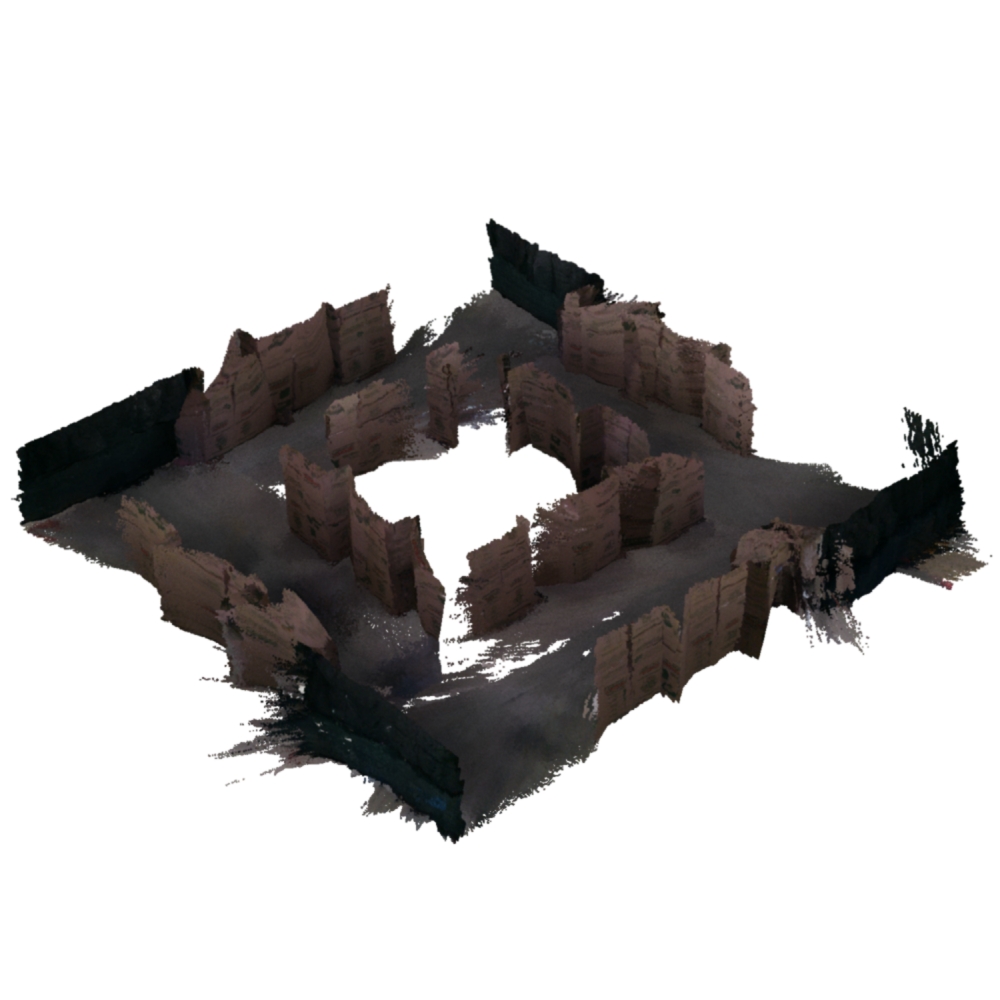

| Specific Scene Type | Boxes and fences |

| Location | Toronto (Canada) |

| Acquisition Type | Depth camera mounted on vehicle |

| Acquisition Device | RealSense D435i RGB-D camera |

| Acquisition Platform | OTTO 100 robot |

| Scan Interval | Minutes | Educated guess by the authors |

| Acquisition Months | Janurary: 0 February: 18 March: 0 April: 0 May: 0 June: 0 July: 0 August: 0 September: 0 October: 0 November: 0 December: 0 |

| Representation | |
|---|---|
| Data Representation | Structured, local (e.g., RGB-D or range images with poses and intrinsics) |
| Specific Data Representation | Color and depth images |
| File Format/Encoding | PNG |
| Raw Data | ROS bags |
| Additional Data | 2D LiDAR |
| Coordinate System | Metric (m) |

| Quality and Usability | |
|---|---|
| Registration | Coarsely registered |
| Number of Partial Epochs | - |
| Unusable Data Reason | - |
| Splits | No |

| Per-Point Attributes | |
|---|---|
| Intensity/Reflectivity | No |
| Color | Yes |
| Semantic Labels | Yes (16 classes) |
| Instance Labels | No |
| Change Labels | - |

| Statistics | |
|---|---|
| Number of Points per Epoch (minimum) | 135M |
| Number of Points per Epoch (median) | 314M |
| Number of Points per Epoch (maximum) | 488M |
| Avg. Point Spacing (minimum) | 976 µm |
| Avg. Point Spacing (median) | 1.3 mm |
| Avg. Point Spacing (maximum) | 1.7 mm |
| Avg. Change Points per Epoch | - |
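The table does not state how the average point spacing was computed. One common definition is the mean distance from each point to its nearest neighbor. The brute-force NumPy sketch below implements that definition; the function name `average_point_spacing` and the `chunk` size are illustrative. Its cost is quadratic in the number of points, so for full epochs with hundreds of millions of points a KD-tree such as `scipy.spatial.cKDTree` would be the practical choice.

```python
import numpy as np


def average_point_spacing(points, chunk=256):
    """Mean nearest-neighbor distance of an (N, 3) point array, N >= 2 (brute force)."""
    pts = np.asarray(points, dtype=np.float64)
    sq_norms = (pts ** 2).sum(axis=1)
    nn_dist = np.empty(len(pts))
    for start in range(0, len(pts), chunk):
        block = pts[start:start + chunk]
        n = len(block)
        # Squared distances via |a|^2 + |b|^2 - 2 a.b, avoiding an (n, N, 3) temporary.
        d2 = sq_norms[start:start + n, None] + sq_norms[None, :] - 2.0 * block @ pts.T
        np.maximum(d2, 0.0, out=d2)  # clamp tiny negative values caused by rounding
        # Ignore each point's zero distance to itself.
        rows = np.arange(n)
        d2[rows, start + rows] = np.inf
        nn_dist[start:start + n] = np.sqrt(d2.min(axis=1))
    return float(nn_dist.mean())
```

On a regular grid with 1 mm spacing this returns 0.001 (in meters), which is the order of magnitude reported in the table.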

Paper Reference 1

@inproceedings{qian2022datasettorwicmapping,

author = {Jingxing Qian and Veronica Chatrath and Jun Yang and James Servos and Angela P. Schoellig and Steven L. Waslander},

title = {{POCD}: Probabilistic Object-Level Change Detection and Volumetric Mapping in Semi-Static Scenes},

booktitle = {Proc. Robotics: Science and Systems XVIII},

year = {2022},

doi = {10.15607/RSS.2022.XVIII.013},

}